Data Science Blog

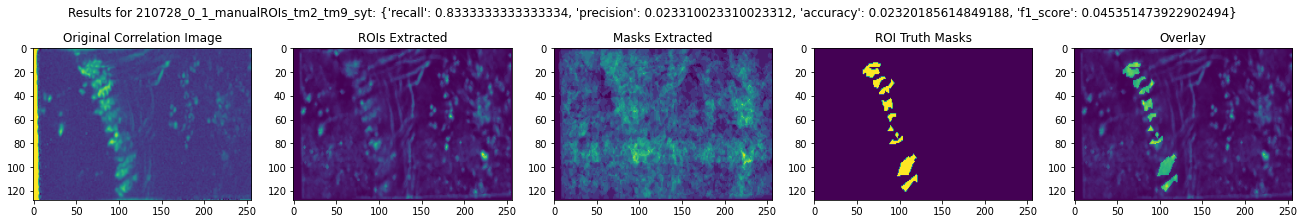

Neuroscientists often study neuron function in fruit flies (Drosophila) as a simplified stand-in for more complicated brains, such as the human brain. There are many established tools for studying Drosophila neural circuits, but most approaches study one cell type at a time. Our approach captures multiple cell types, allowing us to study more of the network at once, including any potential interactions.

Our project, a collaboration between a Stanford neuroscientist and University of Virginia Data Science, uses the CaImAn Python library from the Flatiron Institute to isolate neurons in recorded video. We then build a classification model to identify the neuron type, using the shape of the neuron, its reaction to stimulus, calcium deconvolution, and other output from the CaImAn model. This project is still in progress.

This was a class project using a number of classification models, from logistic regression to random forest, to classify pixels as blue tarps or non-blue tarps (with blue tarps indicating people in need).

Using PySpark, my group and I reviewed Reddit data to answer a number of questions. The primary purpose of this assignment was to flex our muscles in PySpark. To test our limits, we set an overly ambitious goal of taking the first 10 million rows of Reddit comments from May 2015. We had three research questions:

- Can we predict sentiment using NLP of Reddit comments?

- Can we predict which subreddit a comment came from based on its text and some metadata features?

- Can we help predict post removal using tagged user flairs?

We sampled and manipulated the data within Spark and then fed it into model pipelines to get our results.

For my portion of the project, I focused on the second question. To start, I analyzed the data and selected the top 100 subreddits (the point just before the number of comments per subreddit dropped off sharply). I then used word2vec to incorporate the text of each comment along with the metadata already present, and fed the result into a random forest model.
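The label-selection step described above can be sketched in a few lines. This is a plain-Python illustration rather than the PySpark code we actually ran, and the function name `keep_top_subreddits`, the field names `subreddit` and `body`, and the sample rows are all assumptions made up for the example.

```python
from collections import Counter


def keep_top_subreddits(comments, n):
    """Return only the comments whose subreddit is among the n most frequent."""
    # Count how many comments each subreddit has.
    counts = Counter(c["subreddit"] for c in comments)
    # Keep the names of the n largest subreddits.
    top = {name for name, _ in counts.most_common(n)}
    return [c for c in comments if c["subreddit"] in top]


# Tiny made-up sample; field names are assumptions for illustration.
sample = [
    {"subreddit": "AskReddit", "body": "What is your favorite book?"},
    {"subreddit": "AskReddit", "body": "Why is the sky blue?"},
    {"subreddit": "nfl", "body": "Great game last night."},
    {"subreddit": "nfl", "body": "That was a terrible call."},
    {"subreddit": "tinysub", "body": "Hello?"},
]
filtered = keep_top_subreddits(sample, n=2)
```

Restricting the labels this way keeps the classifier from having to learn thousands of classes that each have only a handful of examples.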

It was immediately clear we didn't have nearly enough memory available to approach this research question (if this is even a possible question to answer at this scale). However, the pipeline we created was ready to handle any amount of data we had the resources to throw at it.

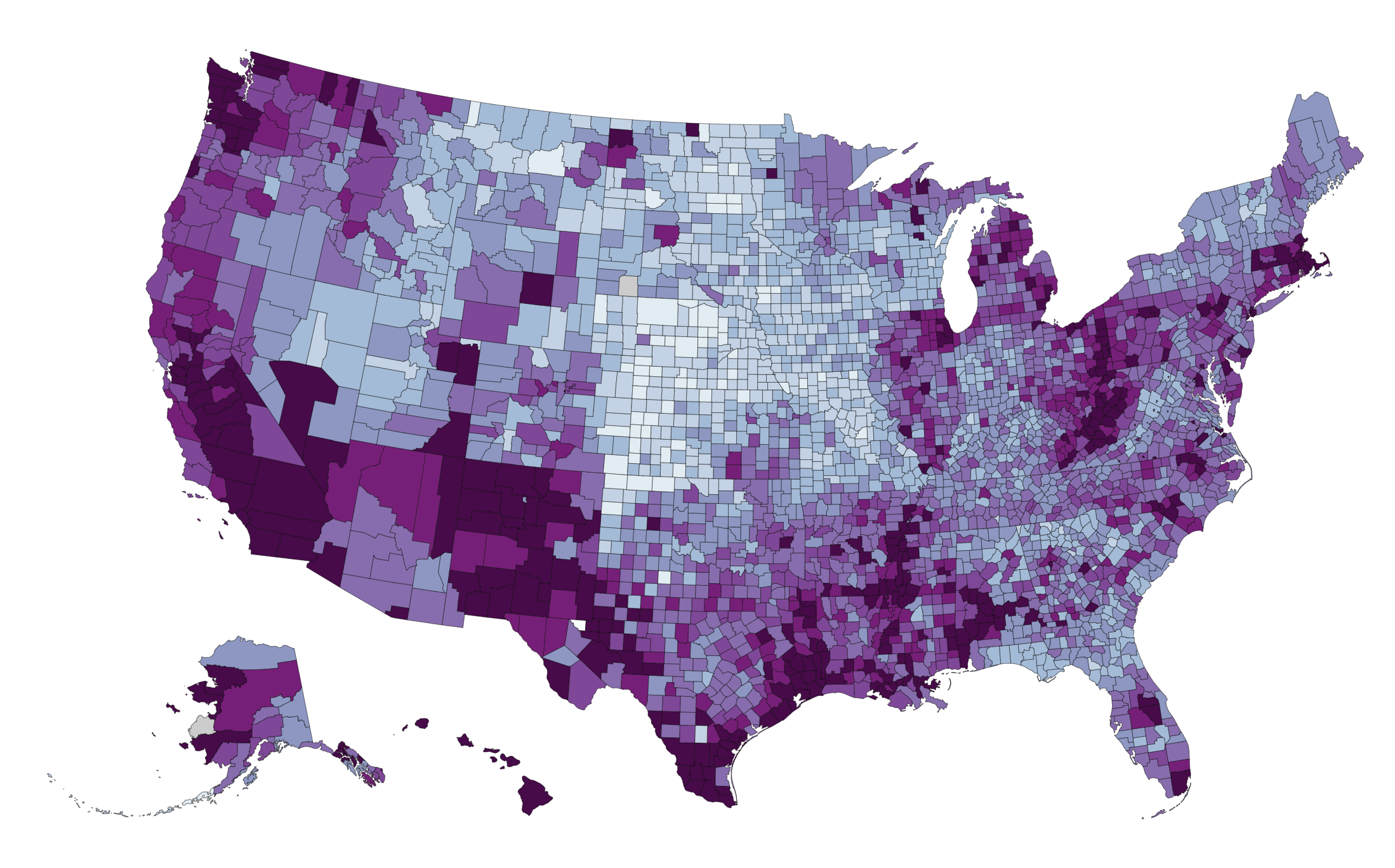

Visualizing and communicating data is extremely important in data science, yet visualizations are often misleading. During the 2020 election, the general public was flooded with maps showing predicted voting results. However, the vast majority of these focused on geography rather than population or even number of electors.

Here is my attempt at a method to visualize population more accurately (at the county rather than the state level) for voting, unemployment, and population data. I created this interactive graphic using D3.js, with Python to pull in and clean US Census, MIT Election Lab, and Bureau of Labor Statistics data.

This project was for my Exploratory Text Analytics class, an NLP class with a strong humanities bent. My goal for this project was to explore the difference between Conan Doyle's Sherlock Holmes works and the other works in his full corpus. I found that Arthur Conan Doyle's works all share a similar style, which makes it difficult for word-frequency-based techniques to differentiate between Sherlock Holmes and non-Sherlock Holmes writings. However, tree-based models relying on TF-IDF were much more successful with the right parameters. If our goal is to predict whether a book by Conan Doyle is a story about Sherlock Holmes, then a tree-based method (like the yule model) is probably the way to go.
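For readers unfamiliar with TF-IDF, here is a minimal sketch of one common variant (term frequency times the log of inverse document frequency). It is not the exact weighting used in the project; the `tfidf` function and the three toy documents are assumptions for illustration only.

```python
import math
from collections import Counter


def tfidf(docs):
    """Compute TF-IDF weights for a list of tokenized documents.

    Uses tf = count / document length and idf = log(N / document frequency).
    Many other TF-IDF variants exist; this is just one of them.
    """
    n_docs = len(docs)
    # Document frequency: number of documents that contain each term.
    df = Counter(term for doc in docs for term in set(doc))
    weights = []
    for doc in docs:
        counts = Counter(doc)
        total = len(doc)
        weights.append({
            term: (count / total) * math.log(n_docs / df[term])
            for term, count in counts.items()
        })
    return weights


# Made-up toy documents, already tokenized.
docs = [
    "holmes examined the letter".split(),
    "watson examined the patient".split(),
    "the ship sailed north".split(),
]
weights = tfidf(docs)
```

A word that appears in every document (like "the" here) gets a weight of zero, while a word unique to one document (like "holmes") gets the highest weight. Those per-document weights are the kind of features a tree-based model can split on.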

To create this corpus, I modified an existing function to pull in all of Conan Doyle's work on Project Gutenberg and eliminate duplicates and foreign-language translations. Next, I created several functions to break each work down into a workable OHCO format (Ordered Hierarchy of Content Objects), which could then be tokenized. I applied several techniques to see where I could spot differences between the two groups (PCA, Word2Vec, tree-based models, topic modeling, and plot comparison using the Syuzhet model).
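To make the OHCO idea concrete, here is a rough sketch of turning raw text into rows indexed by chapter, paragraph, sentence, and token position. It is not the code from the project: the function name `to_ohco_tokens`, the assumption that chapters start with a line beginning "CHAPTER", and the simple regular expressions are all stand-ins for illustration.

```python
import re


def to_ohco_tokens(text):
    """Split raw text into (chapter, paragraph, sentence, token_pos, token) rows."""
    rows = []
    # Assumption: each chapter is introduced by a line starting with "CHAPTER".
    chapters = [c for c in re.split(r"(?m)^CHAPTER\b.*$", text) if c.strip()]
    for ch_num, chapter in enumerate(chapters, start=1):
        # Paragraphs are separated by one or more blank lines.
        paragraphs = [p for p in re.split(r"\n\s*\n", chapter) if p.strip()]
        for para_num, para in enumerate(paragraphs, start=1):
            # Naive sentence split on whitespace after ., ! or ?
            sentences = [s for s in re.split(r"(?<=[.!?])\s+", para.strip()) if s]
            for sent_num, sentence in enumerate(sentences, start=1):
                tokens = re.findall(r"[A-Za-z']+", sentence.lower())
                for tok_num, token in enumerate(tokens, start=1):
                    rows.append((ch_num, para_num, sent_num, tok_num, token))
    return rows


sample = """CHAPTER I

Holmes looked up. He smiled.

Watson sat down.

CHAPTER II

The game is afoot!
"""
rows = to_ohco_tokens(sample)
```

Because every token keeps its full position in the hierarchy, the same table can be regrouped by chapter, paragraph, or sentence depending on which technique is being applied.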

I've always been a fan of storytelling in different mediums. As a fun coding exercise, I decided to create a text-based animation in the terminal, generated by running a Python script full of print statements. After dealing with complex Python libraries, I love how clean it is to run simple code in the terminal. As long as you have Python installed (and your window is big enough), it will look the same for everyone.
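The basic mechanism is simple enough to sketch. The snippet below is a minimal illustration, not the actual script: `make_frames` and `render_frames` are hypothetical names, and clearing the screen with an ANSI escape sequence is an assumption that may not work in every terminal.

```python
import time


def make_frames(width=10):
    """Build frames of a small character moving left to right."""
    return [" " * i + "o>" for i in range(width)]


def render_frames(frames, delay=0.2, out=print, sleep=time.sleep):
    """Print each frame in turn, clearing the screen before each one."""
    for frame in frames:
        # ANSI escape: clear the screen and move the cursor to the top-left.
        out("\033[2J\033[H" + frame)
        sleep(delay)
```

Calling `render_frames(make_frames())` plays the animation. The `out` and `sleep` parameters exist only so the function can be exercised without actually printing or waiting.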